Replicate

Run, Deploy & Scale Open-Source AI Models Instantly with APIs

ISO/IEC 27001:2022 (infrastructure-aligned)


Product Description

Replicate is a developer-focused AI platform for running, deploying, and scaling open-source machine learning models through simple APIs. Instead of managing infrastructure, GPUs, or model packaging themselves, teams can call state-of-the-art models for image generation, video processing, audio synthesis, speech recognition, text generation, and more. Replicate hosts a large ecosystem of popular open-source models, including Stable Diffusion, Whisper, LLaMA-based models, and cutting-edge research projects. Developers can run models on demand, deploy custom models from GitHub, version them, and scale inference automatically, while Replicate handles compute provisioning, performance optimization, and reliability behind the scenes.

With AiDOOS, Replicate becomes a production-ready AI deployment engine. AiDOOS manages model selection, deployment architecture, cost optimization, prompt and parameter tuning, workflow orchestration, and integration with applications, data pipelines, and business systems. AiDOOS also supports MLOps best practices such as monitoring, version control, testing, and governance.

Together, Replicate and AiDOOS help teams experiment quickly, ship AI features faster, and scale machine learning capabilities without operational overhead.
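As a rough illustration of the "simple APIs" described above, the sketch below builds, but does not send, a request that asks Replicate to run one prediction over HTTP. It assumes the `POST https://api.replicate.com/v1/predictions` endpoint, bearer-token authentication, and a JSON body with `version` and `input` fields, as described in Replicate's HTTP API documentation; confirm these against the current reference before relying on them. The version id and the `prompt` input are hypothetical placeholders, since each model defines its own inputs.

```python
import json
import os
import urllib.request

# Assumed endpoint from Replicate's HTTP API docs; verify against the current reference.
PREDICTIONS_URL = "https://api.replicate.com/v1/predictions"


def build_prediction_request(version: str, model_input: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates one prediction.

    `version` is a model version id and `model_input` holds the model's inputs;
    both are caller-supplied because every model has its own input schema.
    """
    body = json.dumps({"version": version, "input": model_input}).encode("utf-8")
    return urllib.request.Request(
        PREDICTIONS_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # assumed bearer-token auth scheme
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_prediction_request(
        version="<model-version-id>",  # hypothetical placeholder, not a real version id
        model_input={"prompt": "an astronaut riding a horse"},  # hypothetical input field
        token=os.environ.get("REPLICATE_API_TOKEN", "<your-token>"),
    )
    # Nothing is sent here; urllib.request.urlopen(req) would submit the request.
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes the payload easy to inspect and test; the JSON response to a real call describes the newly created prediction, including its current `status`.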

From Challenge to Success

See the transformation in action

Challenge

Results

Features

Core Functions at a Glance

Hosted Open-Source Models

Run popular AI models instantly

Faster experimentation

Custom Model Deployment

Deploy models directly from GitHub

Simplified production rollout

Auto-Scaling Inference

Scale GPU workloads on demand

Reliable performance

Versioning & Reproducibility

Track and manage model versions

Safer deployments

Simple, Developer-Friendly APIs

Integrate AI in minutes

Faster product development
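Predictions run asynchronously, so a client typically checks a prediction's status until it finishes. The sketch below polls under the assumptions that each prediction's JSON carries `status` and `urls.get` fields and that `succeeded`, `failed`, and `canceled` are the terminal states, which matches Replicate's published prediction schema but should be verified. The `fetch` callable is a hypothetical stand-in for an authenticated HTTP GET; injecting it keeps the loop testable without network access. Pinning the `version` in the create request is what ties each polled result to one specific model version.

```python
import time
from typing import Callable

# Assumed terminal statuses from Replicate's prediction docs; verify before use.
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def wait_for_prediction(
    prediction: dict,
    fetch: Callable[[str], dict],
    interval_s: float = 1.0,
    timeout_s: float = 300.0,
) -> dict:
    """Poll a prediction until it reaches a terminal status or the timeout expires.

    `fetch` is a hypothetical caller-supplied function that GETs a URL with the
    API token attached and returns the decoded JSON body as a dict.
    """
    deadline = time.monotonic() + timeout_s
    while prediction["status"] not in TERMINAL_STATUSES:
        if time.monotonic() > deadline:
            raise TimeoutError(
                f"prediction {prediction.get('id')} still {prediction['status']}"
            )
        time.sleep(interval_s)
        # Re-read the prediction from the URL it advertises for status checks.
        prediction = fetch(prediction["urls"]["get"])
    return prediction


if __name__ == "__main__":
    # Simulated responses stand in for real API calls (hypothetical data).
    get_url = "https://example.invalid/predictions/p1"
    responses = iter([
        {"id": "p1", "status": "processing", "urls": {"get": get_url}},
        {"id": "p1", "status": "succeeded", "output": ["result.png"], "urls": {"get": get_url}},
    ])
    start = {"id": "p1", "status": "starting", "urls": {"get": get_url}}
    final = wait_for_prediction(start, fetch=lambda url: next(responses), interval_s=0.0)
    print(final["status"], final["output"])  # succeeded ['result.png']
```

A fixed polling interval keeps the sketch simple; a production client might add backoff or use a push-style notification mechanism instead.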

Real-World Use Cases

See how teams drive results across industries

Integrations

Seamlessly connect with your entire tech ecosystem

Pricing, TCO & ROI

Request a meeting to discuss Replicate's pricing.

Customer Success Stories

Real results from real customers

VisionForge Studio

SoundWave AI

Security, Compliance & Reliability

Enterprise-grade security you can trust

Implementation with AiDOOS

Outcome-based delivery with expert support

Delivery Model

Implementation Timeline

Alternatives & Comparisons

Find the perfect fit for your needs

| Capability | Replicate | It'sAlive Chatbot | Ludis Analytics | MyEssayWriter.AI |
|---|---|---|---|---|
| Customization | | | | |
| Ease of Use | | | | |
| Enterprise Features | | | | |
| Pricing | | | | |
| Integration Ecosystem | | | | |
| Mobile Experience | | | | |
| AI & Analytics | | | | |
| Quick Setup | | | | |

Explore Alternative Products

Compare and choose the best solution for your business

It'sAlive Chatbot Builder

Deploy Facebook Chatbots with ItsAlive + AiDOOS | Smart Customer Engagement Create intelligent Messe

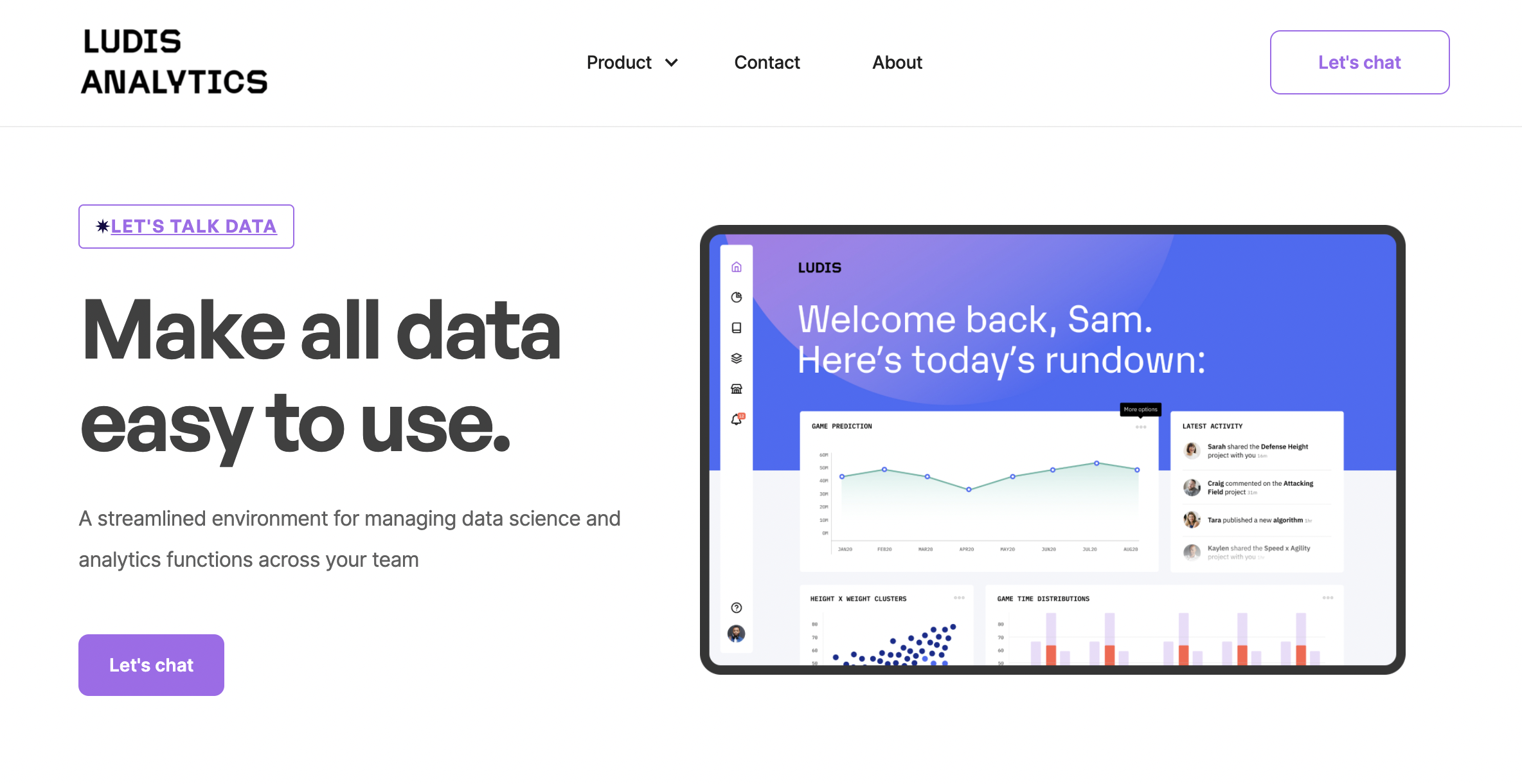

Ludis Analytics Data and Insights Platform

Ludis: Unified Data Platform for Seamless Ingestion, Transformation, and Visualization Ludis is an a

MyEssayWriter.AI

Transform Your Writing Workflow with myessaywriter.ai Unlock seamless essay creation and elevate you

Screenshots & Video Gallery

See Replicate in action

Frequently Asked Questions

Everything you need to know